AI Needs Better UX

Using a Wardley Map to Find Innovation Opportunities for GenAI

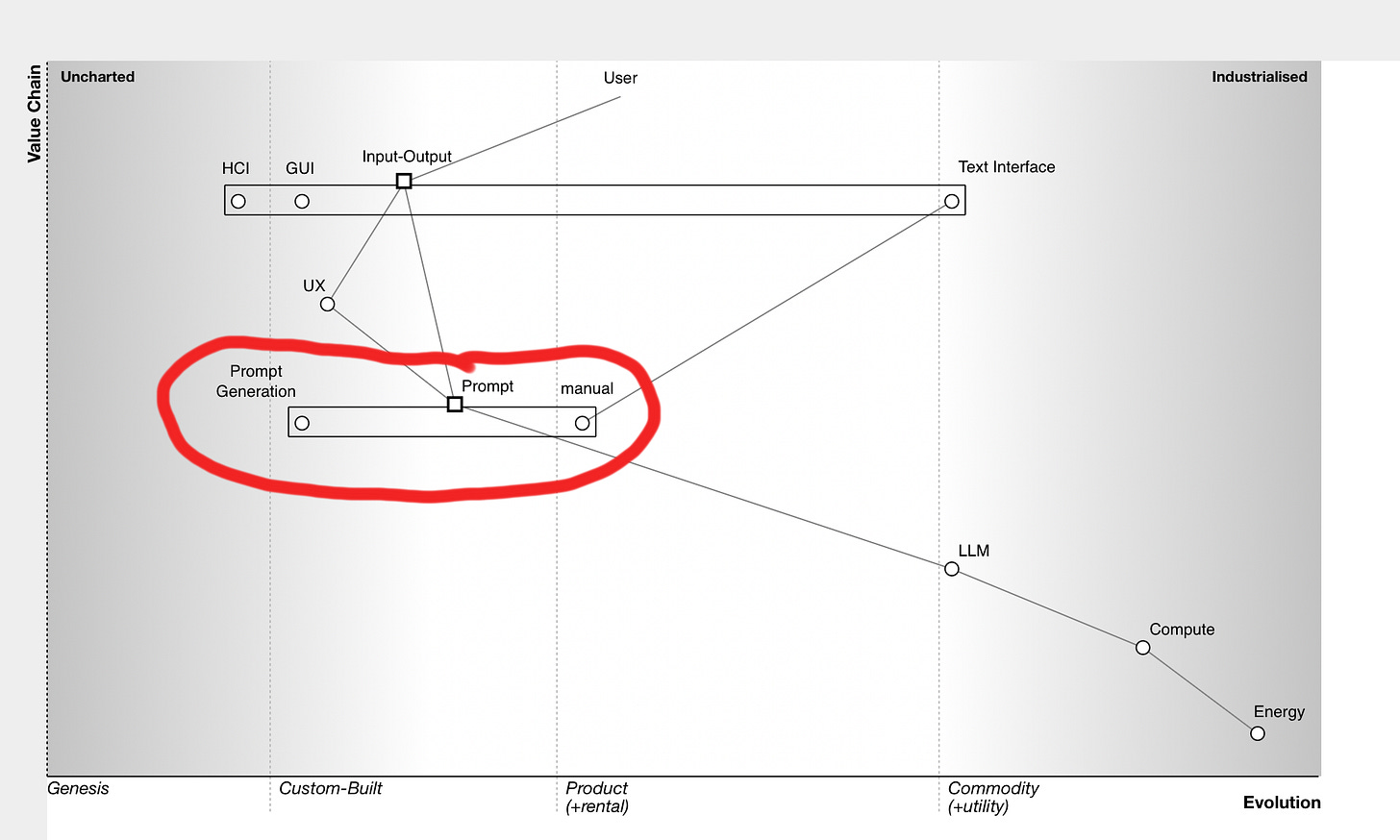

My information feeds, and likely yours, have been full of GenAI chatter for some time now. I'm surprised that the discussion is still stuck on general models and other components deep in the value chain. I miss a discussion of the parts of the value chain that are more likely to produce innovative, differentiating products.

To explain, I will use a Wardley Map. If you are wondering why I chose this method, I explain that further down in this article. Let's look at the map and start at the bottom, because that is where the discourse is currently centered. The public conversation focuses on energy, compute (data centers), and LLMs (foundational models). Energy and compute are established commodities. If you're not in the data center or energy business, you should not aspire to innovate in this field. You pick a provider, as you always have.

LLMs are a commodity

I also consider LLMs a commodity. Everybody can choose between several APIs that are nearly on par. Again, if offering LLMs is not your core business model, why invest there? Given how quickly the key differentiators between models are disappearing, I don't see why they deserve so much attention. You will likely switch the API endpoint from time to time, just as you change your energy provider: an operational act, not an innovation investment.
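To make the "switch the endpoint like an energy provider" point concrete, here is a minimal Python sketch, assuming your product codes against a small interface of its own rather than against a specific vendor SDK. `TextModel`, `ProviderA`, `ProviderB`, `PROVIDERS`, and `get_model` are hypothetical names for illustration, and the providers are stubs that make no network calls.

```python
from typing import Callable, Dict, Protocol


class TextModel(Protocol):
    """Minimal interface the product depends on; any LLM provider can sit behind it."""

    def complete(self, prompt: str) -> str: ...


class ProviderA:
    """Hypothetical stand-in for one vendor's API client (no network call)."""

    def complete(self, prompt: str) -> str:
        return f"[provider-a] {prompt}"


class ProviderB:
    """Hypothetical stand-in for a second, interchangeable vendor."""

    def complete(self, prompt: str) -> str:
        return f"[provider-b] {prompt}"


# Registry of available providers: swapping one is a configuration change, not a redesign.
PROVIDERS: Dict[str, Callable[[], TextModel]] = {
    "provider-a": ProviderA,
    "provider-b": ProviderB,
}


def get_model(name: str) -> TextModel:
    """Look up the configured provider by name, like picking an energy supplier."""
    try:
        return PROVIDERS[name]()
    except KeyError:
        raise ValueError(f"unknown provider: {name}") from None


if __name__ == "__main__":
    # The provider name would normally come from configuration.
    model = get_model("provider-a")
    print(model.complete("Summarize this support ticket"))
```

The product code only ever calls `complete`, so moving from one vendor to another touches the registry and the configuration, nothing in the user-facing features.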

Additionally, it’s currently unclear whether creating LLMs can be profitable in itself. All LLM creators are burning astonishing amounts of money, without a clear USP. But let’s not get distracted.

Looking at the user

Let's look at the other end, close to the user. Currently, most interactions with GenAI happen through prompts in text interfaces. We are back in DOS times. But instead of getting an error message for a lousy prompt, we get a lousy answer. Garbage in, garbage out.

What's missing are new concepts for how we interact with GenAI. I see little investment in either the UX (flow of interaction) or the UI (interaction model) of GenAI-enabled products. What I do see in these products are prompt boxes and ✨ buttons. Even editing text feels like conducting a conversation by fax. This gap is a great place to invest and to differentiate with approaches that fit a customer need.

What about prompting?

Prompting as a craft is currently a tricky investment. I'm confident prompts will increasingly be generated and constructed automatically. That means we will see more approaches that abstract and enrich prompts through generators, both customized and productized (e.g., RAG as a service).
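A minimal Python sketch of such a prompt generator, assuming a tiny in-memory corpus and naive keyword-overlap retrieval as a stand-in for the embedding search a real RAG service would use. `Snippet`, `retrieve`, and `build_prompt` are hypothetical names for illustration. The user types a terse request, and the generator adds a role and retrieved context, so the user never writes the full prompt.

```python
import re
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Snippet:
    source: str
    text: str


def tokenize(text: str) -> Set[str]:
    """Lower-cased word set; a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: List[Snippet], k: int = 2) -> List[Snippet]:
    """Rank snippets by word overlap with the query (naive keyword retrieval, not vector search)."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(s.text)), s) for s in corpus]
    scored = [pair for pair in scored if pair[0] > 0]  # drop snippets with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in scored[:k]]


def build_prompt(user_request: str, corpus: List[Snippet], role: str) -> str:
    """Turn a terse user request into an enriched prompt the user never has to write."""
    context = retrieve(user_request, corpus)
    lines = [f"- ({s.source}) {s.text}" for s in context]
    context_block = "\n".join(lines) or "- (no matching context)"
    return (
        f"You are assisting a {role}.\n"
        f"Relevant context:\n{context_block}\n"
        f"Task: {user_request}\n"
        "Answer concisely and cite the sources in parentheses."
    )


if __name__ == "__main__":
    knowledge_base = [
        Snippet("faq", "Refunds for damaged orders are issued within 14 days"),
        Snippet("shipping", "Shipping policy: orders ship in 2 days"),
        Snippet("hr", "Office hours are 9 to 5"),
    ]
    print(build_prompt("refund policy for damaged orders", knowledge_base, role="support agent"))
```

The interaction the user sees stays simple while the prompt is assembled behind the scenes; replacing the keyword overlap with a vector search or a hosted retrieval service would leave that interaction unchanged.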

As interaction models change, sophisticated prompting skills will be needed less. I don't see prompting becoming the number one skill of the general workforce. It echoes the "everybody needs to learn to code" discussion. Understanding how these systems work will be necessary, but only a fraction of people need to be specialists. And for these specialists, being able to write a good prompt won't be enough. Investing in these skills for engineering roles is essential, but I don't think that's news to anybody.

We need new interaction concepts and metaphors

The Desktop and Application metaphors added a level of abstraction to the OS interface, making computing accessible to everybody. This opened a vast market for new products. GenAI currently lacks this level of abstraction; we are in the command-line era once again.

We must invest in unique User Experience (UX) and Graphical User Interfaces (GUI) to create innovative and valuable products. New metaphors and concepts will drive differentiation in how people interact with AI-supported features, fitting the unique customer problems a product solves.

Pushing this further, another area of investment is the development of new approaches in Human-Computer Interaction (HCI) in general. Yes, we have voice interfaces for GenAI systems, but currently they are essentially voice-to-prompt interfaces that ignore much of the richness of voice (e.g., tone, non-lexical sounds, interruptions). We also see early hints of multimodal interaction (e.g., voice, pointing, camera, gaze), as Google shows in Project Astra. But this is a technology demo showing off a model's capabilities rather than a product with a clear scope.

Looking again at the entire map, it's clear that if you want to invest in AI-enabled products and provide valuable new capabilities to your customers through them, you must concentrate on the interaction models that fit your specific customer situations. Focus on those situations and look for problems your current deterministic approaches can't solve. Your most significant differentiator from the competition will be an interaction with GenAI that blends naturally into how people use your product. It certainly won't be the next generation of Clippy.

Why a Wardley Map?

I used a Wardley Map to help me think this topic through. It started with an initial, wobbly thought: there is a lot of discussion around GenAI in the product space, but not many innovative products are surfacing. Mapping the topic helped me clarify my thoughts and untangle interwoven threads. I could argue with myself by cycling through mapping → looking → reflecting → mapping. Once I started writing this article, moving between writing and mapping gave me two modes of thinking that informed each other.

Once I had mapped the topic, it became apparent that everything connected to the interaction was open to innovation. There are no common patterns beyond the text interface for interacting with GenAI. UX, UI, and prompt generators form the field where product teams can make a difference in their product capabilities.

Another benefit is that I can present my high-level thinking with the map. My mental model is represented in one picture. Even without the text, the focus of my reasoning is clear. You might disagree with my mental model, which is fine. But it's a great starting point for discussing a complicated topic like this. Even better, by looking at the map you might identify things I have missed.

A map is a great discussion starter

The map enables us to explore specific details and challenge our understanding of this topic. For example, you could point at a component to discuss a detail, suggest a change in the value chain, or question the evolution of components. Our discussion would shape our shared understanding, which we would document by updating the map. If we fundamentally disagree, we could map alternatives and compare them to refine our mutual understanding.

Try this with conversation alone. While talking, we can get lost in details, fighting over specifics while overlooking that we might have different mental models. We may never find our way back to the bigger picture.

Supporting a conversation with a map helps to zoom in and out of a topic.

Is “What are we doing about AI?” an ongoing discussion in your organization?

I would love to hear about your approach.

Or do you have another complicated ongoing discussion at your organization?

Let's chat if you would like to explore it with a map.

I have been wondering for some time now why so few people mention the poor user interface of chatbots and chat UIs in general. There simply are no affordances, as Don Norman would say!

I think some of the opportunities that AI offers are in the user experience layer: it offers the potential of an interface that adapts its paradigm to the user's conceptual model, rather than the current situation, where users must adapt their conceptual model to that of the application.

Text chat isn't its final form, but I don't know where it will evolve or whether it will become more commonplace than the GUI systems we use today. Will adaptive GUIs be a thing? Interacting with humanoids? Or something else?